It’s 2025. You might expect R&D to be a cutting-edge field that’s highly automated, powered by AI, and shining with futuristic tools. And of course, some recent breakthroughs are remarkable: lab-grown meat and gene editing just to name a few. But whilst some tools are being embraced to help scientists reach new targets, most lab methods are still archaic.

Are labs embracing new technology?

The reality is that laboratories prefer traditional approaches in most stages of the R&D flow. Many still operate much as they did a century ago, with the primary upgrade being that results are logged in Excel spreadsheets instead of paper notebooks. In an era of AI-driven insights, this is simply not good enough.

Given the discoveries biology labs have produced over the last 100 years, it’s fair to say that this sector deserves better technology. The potential applications are vast: solving the global food shortage, developing more life-saving drugs, and even simply removing harsh chemicals from foods, as encouraged by the current US administration.

One common argument against automating experiments in the field is, “If it isn’t broken, don’t fix it.” But if we applied that same logic elsewhere, we’d still be riding horses instead of driving cars. The fact that something functions fine as is doesn’t mean it can’t be improved; it just means the potential for progress is waiting to be unlocked.

Another common objection is that R&D, and microbiology in particular, is “too unpredictable” to automate: too many variables, too much complexity, too little regularity in how living systems behave. It’s understandable that, at first glance, R&D might seem resistant to the structured logic of technology and AI models. After all, living systems rarely behave like tidy equations, and the field has long relied on human intuition and hands-on experience.

However, this assumption is misguided.

Why the current lab flow is, in fact, broken

The first and clearest sign that lab workflows are fundamentally broken is the continued reliance on human observation for plate analysis. In a 2016 survey published in Nature, Baker reported that more than 70% of researchers had tried and failed to reproduce another scientist’s experiments [1]. Human observation is naturally prone to fatigue, error, and bias. This is not to diminish the irreplaceable role of scientists, whose expertise is essential for interpreting results and driving discovery. But relying on humans to perform repetitive, precision-dependent plate analysis introduces unavoidable variability and limits throughput.

Even when results happen to be consistent across scientists, R&D faces a bigger and more threatening issue. In a 2012 paper, Scannell et al. observed that the number of new drugs approved per billion dollars spent on R&D has halved roughly every nine years since 1950 [2], a trend the authors named Eroom’s law (Moore’s law spelled backwards). This stands in stark contrast to computing, where Moore’s law reflects a steady acceleration of technological progress. This is not just a budgetary concern; it directly threatens the pace of innovation. As regulatory and technical requirements become increasingly stringent, the margin for inefficiency narrows. If scientists do not adapt by using their time and resources more strategically, we risk stalling the very breakthroughs that drive the field forward.
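To make the halving trend concrete, here is a small arithmetic sketch. It assumes an idealised, perfectly smooth halving every nine years, which is a simplification of the trend reported by Scannell et al.; the function name `relative_output` and the baseline of 1.0 in 1950 are illustrative choices, not figures from the paper.

```python
def relative_output(year, base_year=1950, halving_years=9.0):
    """Drugs approved per unit of R&D spend, relative to base_year (= 1.0).

    Assumes an idealised exponential decline that halves every
    `halving_years`; real data is noisier than this curve.
    """
    return 0.5 ** ((year - base_year) / halving_years)


if __name__ == "__main__":
    for year in (1950, 1959, 1968, 1995, 2010):
        print(f"{year}: {relative_output(year):.4f} of the 1950 level")
```

Under this idealised curve, the five halvings between 1950 and 1995 leave roughly 3% of the original output per unit of spend.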

Finally, although this is by no means an exhaustive list, if we are serious about reversing these trends, R&D data cannot remain locked in paper notebooks or static Excel sheets. As a self-proclaimed data nerd, I see information stored this way as inherently siloed, difficult to search, and nearly impossible to integrate into broader analytical workflows. It slows cross-team collaboration, limits the rapid pattern recognition that fuels breakthroughs, and leaves critical work vulnerable to permanent loss from something as simple as a computer failure. Labs need to modernise and adopt cloud systems with strong security, rather than sharing data by email.

Change management is always going to be challenging. Not too long ago, factories were filled with manual labourers performing hazardous, repetitive tasks to produce goods at scale. Today, those environments are largely automated, and the workforce has adapted with new skills. This wasn’t an easy change for the sector, but it was undoubtedly for the best. The R&D sector will inevitably follow a similar transformation, provided it embraces the tools available.

R&D is an ideal candidate for AI

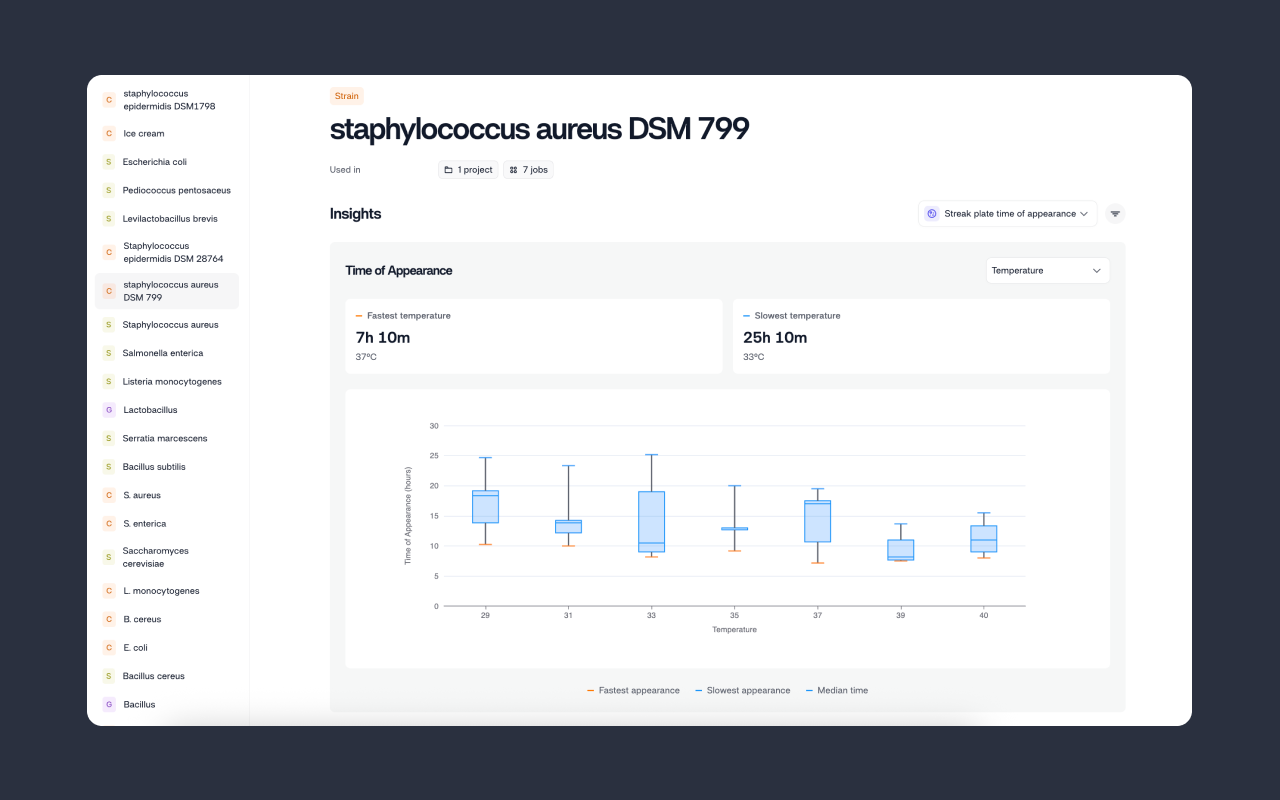

The frequent unpredictability of organisms doesn’t make automation impossible; it makes it essential. The very complexity that looks like a barrier to entry for AI is exactly what modern tools, data analysis, and adaptive AI models are designed to navigate and learn from.

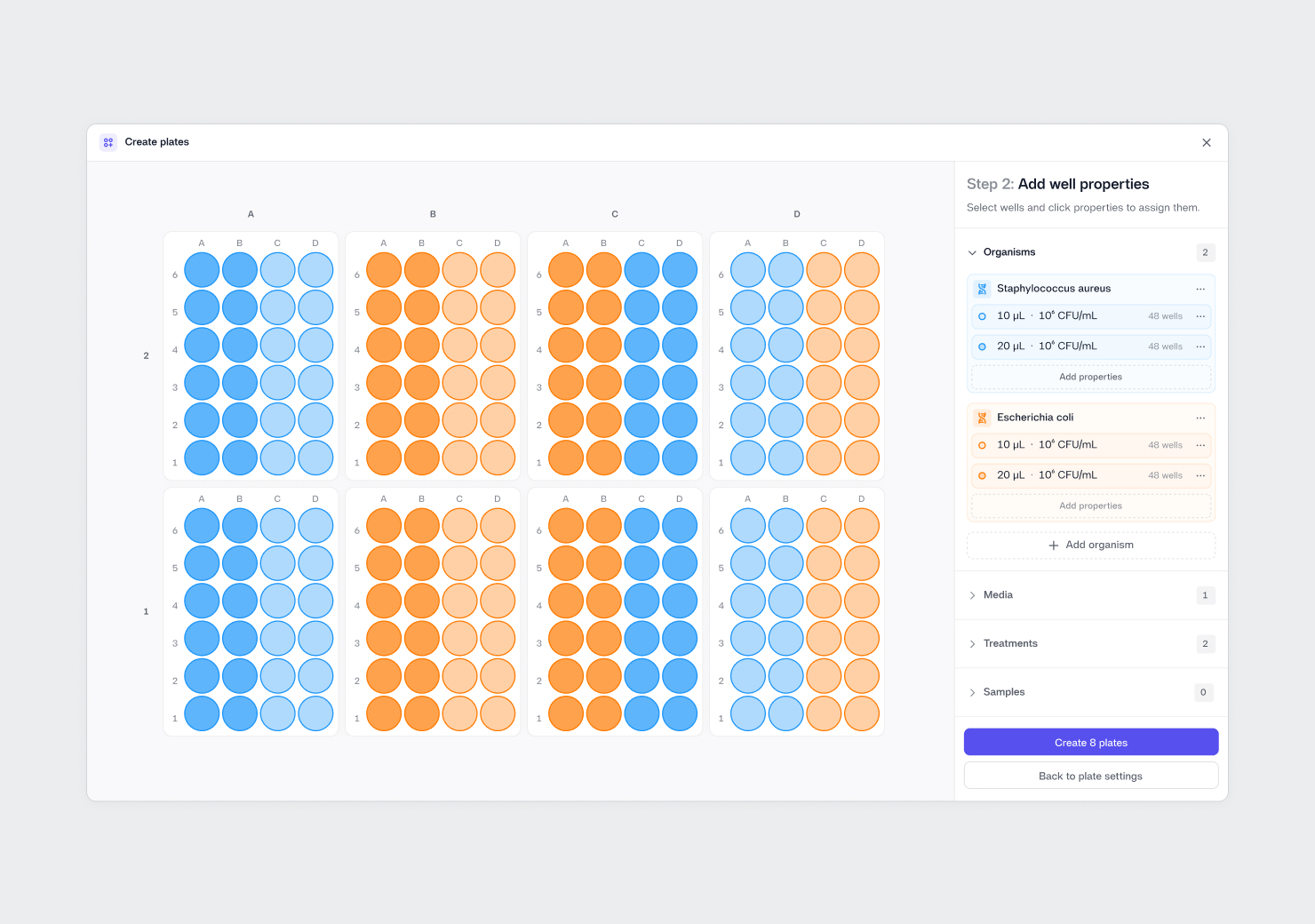

Scientific experiments offer, in theory, precisely the kind of structured, high-quality data that AI systems excel at processing. Variables such as temperature, duration, and results are all meticulously recorded. In a well-designed experiment, only one independent variable changes at a time, creating the perfect conditions for predictive modelling.

In all honesty, the labs aren’t there yet in terms of data quality. Before we can reap the benefits of AI in the labs, the incredibly rich data from experiments has to be normalised into an AI-readable structure. After that, the possibilities are endless.
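As a rough illustration of what normalising lab data could involve, the sketch below converts one free-form spreadsheet row into a record with fixed units. Everything here is hypothetical: the `Observation` schema, the column names (`id`, `temp`, `time`, `colonies`), and the accepted input formats are assumptions made for the example, not a description of any particular lab system.

```python
from dataclasses import dataclass
import re


@dataclass(frozen=True)
class Observation:
    """Hypothetical normalised record: one plate reading with fixed units."""
    experiment_id: str
    temperature_c: float  # always degrees Celsius
    duration_h: float     # always hours
    colony_count: int


def parse_temperature(text):
    """Convert strings such as '37C', '37 °C' or '98.6F' to Celsius."""
    match = re.fullmatch(r"\s*(-?\d+(?:\.\d+)?)\s*°?\s*([CcFf])\s*", text)
    if match is None:
        raise ValueError(f"unrecognised temperature: {text!r}")
    value, unit = float(match.group(1)), match.group(2).upper()
    return value if unit == "C" else (value - 32.0) * 5.0 / 9.0


def parse_duration(text):
    """Convert strings such as '24h', '90 min' or '2 d' to hours."""
    match = re.fullmatch(r"\s*(\d+(?:\.\d+)?)\s*(min|h|d)\s*", text.lower())
    if match is None:
        raise ValueError(f"unrecognised duration: {text!r}")
    minutes_per_unit = {"min": 1.0, "h": 60.0, "d": 1440.0}
    return float(match.group(1)) * minutes_per_unit[match.group(2)] / 60.0


def normalise(row):
    """Turn one spreadsheet row (a dict of strings) into an Observation."""
    return Observation(
        experiment_id=row["id"].strip(),
        temperature_c=parse_temperature(row["temp"]),
        duration_h=parse_duration(row["time"]),
        colony_count=int(row["colonies"]),
    )


if __name__ == "__main__":
    # Invented example row, written the way a hand-filled sheet might look.
    row = {"id": " EXP-001 ", "temp": "98.6F", "time": "90 min", "colonies": "112"}
    print(normalise(row))
```

Rejecting unrecognised values with an error, rather than guessing, is a deliberate choice in this sketch: silently misread units would undermine any model trained on the data.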

Predictive modelling is a fascinating innovation that biology labs are currently missing out on. With enough data, we can begin to form well-founded expectations of how organisms will behave, and then use those expectations to guide experiments. By feeding historical experimental data into AI systems, we can forecast outcomes, optimise processes, and even design experiments with higher success rates. Over time, such systems could drastically reduce time-to-result, potentially from years to months, by drawing on comprehensive datasets of organisms and environmental conditions. Eroom’s law no more.
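The simplest possible version of this idea is fitting a line to past results and using it to forecast the next one. The sketch below does that with ordinary least squares; it is a toy stand-in for the far richer models the paragraph has in mind, the helper names `fit_line` and `predict` are hypothetical, and the numbers are invented purely for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict(model, x):
    """Evaluate a fitted (slope, intercept) pair at x."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Invented illustrative values, not real measurements:
    # incubation time in hours vs. log10 of the colony count.
    hours = [2.0, 4.0, 6.0, 8.0]
    log10_count = [1.1, 1.9, 3.2, 3.9]
    model = fit_line(hours, log10_count)
    print(f"forecast log10 count at 10 h: {predict(model, 10.0):.2f}")
```

Real organism behaviour is rarely this linear, so a production system would need a more expressive model and held-out data to check its forecasts.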

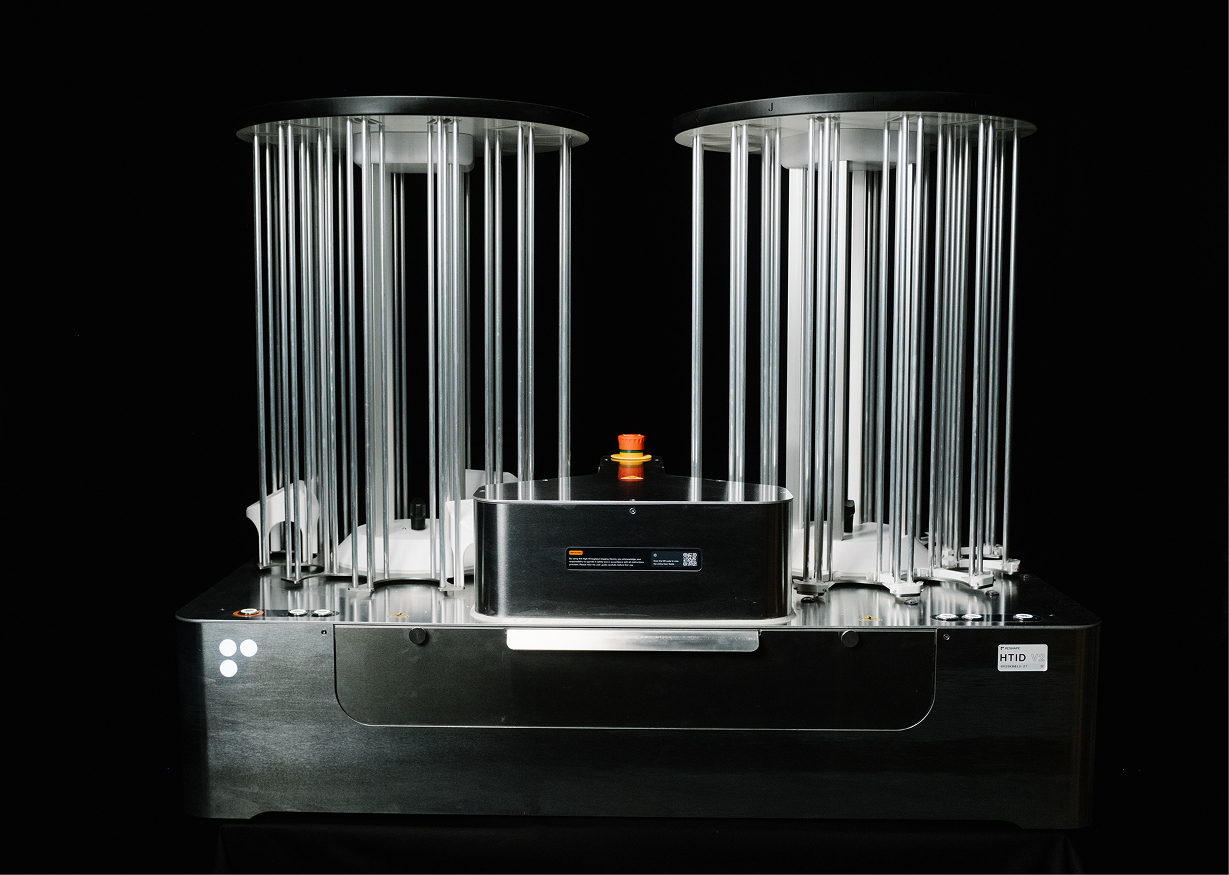

The future laboratory

By 2050, laboratories will look dramatically different. Routine manual tasks, such as counting colonies, will be replaced by automated systems. Scientists will spend less time on repetitive measurement and more time on analysis, interpretation, and innovation.

Currently, many researchers still cling to familiar methods. If a process ‘works’, there’s little drive to change it. Without bold advocates to challenge the status quo, the laboratory of the future will remain stuck in the past. And we have no time to waste if we want to solve looming world challenges.

References

[1] Baker, M., 2016. 1,500 scientists lift the lid on reproducibility. Nature, 533(7604), pp. 452–454. https://doi.org/10.1038/533452a

[2] Scannell, J.W., Blanckley, A., Boldon, H. and Warrington, B., 2012. Diagnosing the decline in pharmaceutical R&D efficiency. Nature Reviews Drug Discovery, 11(3), pp. 191–200. https://doi.org/10.1038/nrd3681